Take a Closer Look at Voracity

Inside the Power of the Platform

Volume

Data from internal and public sources is growing exponentially.

Variety

The myriad structured and unstructured sources are beyond the reach of most tools.

Can your tools handle tomorrow's loads?

Prepare big data subsets for analytics fast by accelerating and combining transforms in your file system, not in the BI or DB layer. Use Voracity to de-duplicate and filter, sort and join, aggregate and segment, and reformat and wrangle data, all in one pass. Create reports on the fly as part of the process, too, with embedded BI. Or send prepared data in memory to BIRT, Datadog, KNIME, or Splunk in real time, or into the cubes your application expects. Otherwise, hand off wrangled flat files or RDB view tables for use in those tools, as well as BusinessObjects, Cognos, Cubeware, iDashboards, MicroStrategy, OAC/OBIEE/ODV, Power BI, QlikView, R, Spotfire, or Tableau, speeding time-to-display.
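To make the one-pass idea concrete, here is a minimal, generic Python sketch of several transforms sharing a single read of the data. It is not Voracity or CoSort syntax; the record fields (`id`, `region`, `amount`), the `prepare` function, and the sample rows are hypothetical, and joins are omitted for brevity.

```python
from collections import defaultdict
from typing import Iterable


def prepare(records: Iterable[dict], min_amount: float = 0.0) -> dict:
    """De-duplicate, filter, reformat, and aggregate records in one pass,
    then return per-segment totals sorted from largest to smallest."""
    seen_ids = set()
    totals = defaultdict(float)
    for rec in records:  # single pass over the input
        if rec["id"] in seen_ids:  # de-duplicate on the (hypothetical) id key
            continue
        seen_ids.add(rec["id"])
        if rec["amount"] < min_amount:  # filter out small records
            continue
        segment = rec["region"].strip().upper()  # reformat/wrangle the key
        totals[segment] += rec["amount"]  # aggregate by segment
    # Sort the (much smaller) aggregated output, not the raw input.
    return dict(sorted(totals.items(), key=lambda kv: kv[1], reverse=True))


SAMPLE = [
    {"id": 1, "region": " east", "amount": 100.0},
    {"id": 2, "region": "West", "amount": 50.0},
    {"id": 1, "region": "east", "amount": 100.0},  # duplicate id
    {"id": 3, "region": "EAST ", "amount": 5.0},   # filtered out below
    {"id": 4, "region": "west", "amount": 80.0},
]

print(prepare(SAMPLE, min_amount=10.0))  # {'WEST': 130.0, 'EAST': 100.0}
```

The design point is that every record is read once and each transform is applied in the same loop, rather than writing and re-reading intermediate results between separate tools.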

Can you acquire and mash up internal and external sources in one place?

What tools are you using now to discover, extract, process, and analyze all the data you gather or buy? Can you reach and process it all in one pane of glass? Can you quality-control and manage its metadata and master data in that same place? Can you analyze the data there too, or at least rapidly integrate and prepare it for external applications? If you use multiple tools, can you manage the expertise they require? Or if you use a legacy ETL platform, can you bear its cost?

The CoSort engine in Voracity processed big data long before it was called big data, running and combining multi-gigabyte transforms in seconds and outperforming third-party sort, BI, DB, and ETL tools by 2X to 20X. And when IRI turned 40 in 2018, data warehousing (DW) industry guru Dr. Barry Devlin declared Voracity to be a production analytic platform.

Voracity now offers Hadoop options too, distributing and scaling huge workloads across commodity hardware via MapReduce 2, Spark, Spark Streaming, Storm, and Tez.

Voracity connects to some 150 different structured, semi-structured, and unstructured data sources and targets, and analyzes, integrates, migrates, governs, and profiles the data they hold.

That includes legacy files, data types, and endian types, as well as popular flat and document file formats, every RDBMS, and newer big data and cloud/SaaS sources.

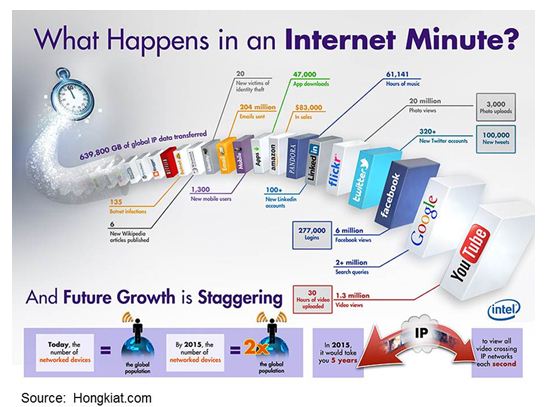

Velocity

CDR (call detail record), IoT, social media, and other data arrive fast and at different intervals.

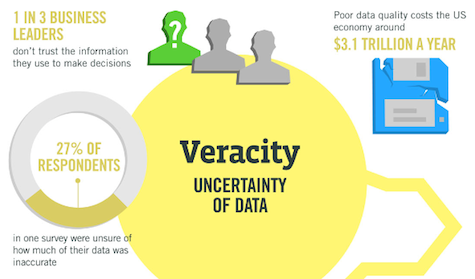

Veracity

Low-quality data jeopardizes applications and analytic value. PII is another data risk.

Are you ready for streaming, near-real-time, and batched data?

The biggest data volumes are still processed in regular batch cycles, which Voracity's native CoSort engine and its Hadoop MapReduce and Tez options optimize. But what about the need to process (transform, mask, reformat) and analyze data in real time for instant promotional campaigns (think mobile devices) or for alerts (like traffic and weather notices) that can help drivers or event-goers?

How do you maintain the reliability and security of your data?

Garbage in, garbage out, and thus data in doubt. Data quality suffers from inconsistent, inaccurate, or incomplete values. Social media data can be deceptive, unstructured data imprecise, and data ambiguity plagues MDM. Survey data can be biased, noisy, or abnormal. Meanwhile, the PII and secrets contained in all that data mean you have to mask it before it is shared. Do you have a central point of control for cleaning data and making it safe?

Voracity includes the CoSort engine, which integrates data in memory and in files, so you can process big data 6X faster than ETL tools, 10X faster than SQL, and 20X faster than BI/analytic tools. Its typical processing mode, including for change data capture (CDC), is batch.

Voracity can process real-time, near-real-time, and streaming data through Kafka or MQTT brokers, in memory via pipes or input procedures to CoSort, or in Hadoop Spark or Storm engines, all from the same Eclipse GUI, IRI Workbench. Other options include using the built-in job launcher to spawn Voracity jobs at near-real-time intervals, or using specialized BAM or CEP tools to manage event-driven activity.

Voracity's data discovery, fuzzy matching, value validation, scrubbing, enrichment, and unification features all improve data quality.
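As an illustration of what fuzzy matching means in a data-quality context, the sketch below normalizes names and scores each pair with Python's standard-library `difflib.SequenceMatcher`. This is a generic stand-in, not Voracity's matching algorithm; the `normalize`, `similarity`, and `fuzzy_duplicates` helpers and the 0.85 threshold are hypothetical choices.

```python
from difflib import SequenceMatcher
from itertools import combinations


def normalize(name: str) -> str:
    """Lower-case, drop periods and commas, and collapse whitespace."""
    cleaned = name.lower().replace(".", "").replace(",", "")
    return " ".join(cleaned.split())


def similarity(a: str, b: str) -> float:
    """Similarity ratio in [0, 1] between two normalized strings."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


def fuzzy_duplicates(names, threshold=0.85):
    """Return (name_a, name_b, score) for every pair at or above threshold."""
    matches = []
    for a, b in combinations(names, 2):  # all pairs: O(n^2), fine for a demo
        score = similarity(a, b)
        if score >= threshold:
            matches.append((a, b, round(score, 2)))
    return matches


names = ["Acme Corp.", "ACME Corp", "Globex Inc", "Initech LLC"]
print(fuzzy_duplicates(names))  # [('Acme Corp.', 'ACME Corp', 1.0)]
```

Comparing every pair grows quadratically, so larger data sets usually group candidate records first (for example by a shared key prefix) and only score pairs within each group.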

Voracity's comprehensive data masking functions and synthetic test data generation capabilities remove the risk of data breaches and poor prototypes.
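Two common masking techniques, keyed pseudonymization and partial redaction, can be sketched with Python's standard library as follows. These are generic illustrations, not Voracity's masking functions; the function names, the sample record, and the hard-coded demo key are hypothetical, and a real deployment would keep the key in a managed secret store.

```python
import hashlib
import hmac

# Hypothetical key for illustration only; keep real keys in a secret store.
DEMO_KEY = b"demo-key-not-for-production"


def pseudonymize(value: str, key: bytes = DEMO_KEY) -> str:
    """Keyed, deterministic pseudonym: the same input always yields the same
    token, so masked values can still be joined across tables."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]  # truncated for readability


def redact_digits(value: str, keep_last: int = 4) -> str:
    """Replace all but the last `keep_last` digits with '*' (drops separators)."""
    digits = "".join(c for c in value if c.isdigit())
    keep = max(0, min(keep_last, len(digits)))
    return "*" * (len(digits) - keep) + digits[len(digits) - keep:]


record = {"email": "alice@example.com", "card": "4111 1111 1111 1234"}
masked = {
    "email": pseudonymize(record["email"]),
    "card": redact_digits(record["card"]),
}
print(masked["card"])  # ************1234
```

Using a keyed HMAC rather than a plain hash means someone without the key cannot rebuild the mapping by hashing guessed values, while determinism preserves referential integrity between masked tables.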

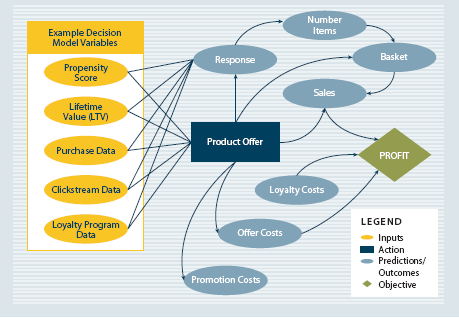

Value

And the point of it all: getting analytic value from big data.

Are you getting the insights you need to make decisions?

Consider what information and decisions you need from your data. For example, are you tracking consumer behavior, weather patterns, or device and web log activity so that you can change promotions, make predictions, or diagnose problems? Do you see the value in an IDE easy enough for self-service data preparation and presentation, yet powerful enough for IT and business users to collaborate on data lifecycle management? And if you use BIRT, KNIME, or Splunk, can you get data into those structures AS it's being wrangled?